How I Reduced Our AWS Bill by 65% Through Infrastructure Optimization

Published on: December 14, 2024

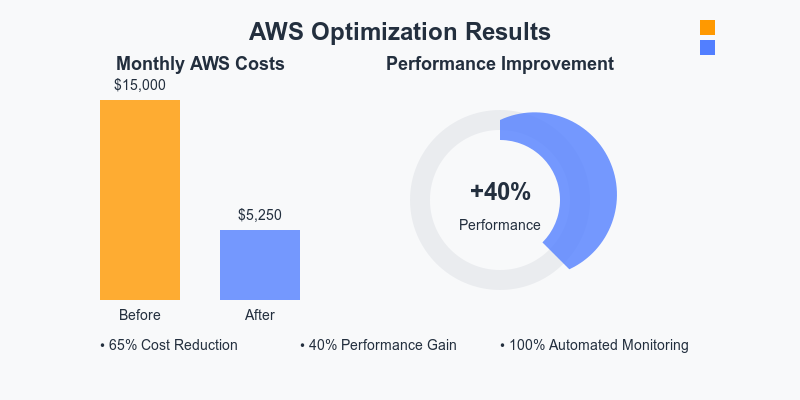

Ever looked at your AWS bill and wondered why it’s climbing month after month? That was exactly our situation six months ago when our startup’s cloud costs started eating into our runway. What happened next was an adventure in AWS cost optimization that led to some surprising discoveries. Spoiler alert: we cut our bill by 65% while improving application performance!

The Wake-Up Call

It started with a notification: last month's AWS bill had exceeded $15,000. For a Series A startup, this was a red flag that needed immediate attention. After diving into AWS Cost Explorer, I noticed several concerning patterns:

- Our EC2 instances were running at 20% utilization

- We had unused EBS volumes collecting dust

- Our RDS instances were oversized for our workload

- Lambda functions were executing longer than necessary

- We had no S3 lifecycle policies at all
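The per-service breakdown that surfaces patterns like these is also available through the Cost Explorer API. Below is a minimal sketch, assuming you pass in a boto3 Cost Explorer client (`boto3.client("ce")`). The function name `cost_by_service` and the date range are illustrative and not part of our actual tooling.

```python
from collections import defaultdict


def cost_by_service(ce, start, end):
    """Return [(service, cost_usd), ...] sorted from most to least expensive.

    `ce` is assumed to be a boto3 Cost Explorer client. `start` and `end`
    are ISO dates ("YYYY-MM-DD"); Cost Explorer treats `end` as exclusive.
    """
    totals = defaultdict(float)
    kwargs = {
        "TimePeriod": {"Start": start, "End": end},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "DIMENSION", "Key": "SERVICE"}],
    }
    while True:
        response = ce.get_cost_and_usage(**kwargs)
        for period in response["ResultsByTime"]:
            for group in period["Groups"]:
                service = group["Keys"][0]
                # Amounts are returned as strings
                totals[service] += float(group["Metrics"]["UnblendedCost"]["Amount"])
        # Follow pagination until Cost Explorer stops returning a token
        token = response.get("NextPageToken")
        if not token:
            break
        kwargs["NextPageToken"] = token
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
```

Called with a real client and a one-month range, such as `cost_by_service(boto3.client("ce"), "2024-05-01", "2024-06-01")`, it lists services from most to least expensive. That ordering is usually enough to decide where to look first.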

The Optimization Journey

1. Right-sizing EC2 Instances

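First, I enabled CloudWatch detailed monitoring on our EC2 instances, which publishes metrics every minute instead of every five minutes, to gather more precise data. Using this data, I:

- Created custom CloudWatch dashboards to track CPU, memory, and I/O patterns (memory metrics come from the CloudWatch agent, since EC2 does not publish them by default)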

- Analyzed usage patterns over two weeks

- Identified that our c5.2xlarge instances could be downsized to c5.large

- Implemented Auto Scaling based on actual demand patterns

Results: 40% reduction in EC2 costs while maintaining performance.
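The right-sizing decision boils down to simple arithmetic. The sketch below shows one hypothetical heuristic, not the exact rule we applied. It takes the p95 of the CPU samples (in practice these could come from the `CPUUtilization` metric in CloudWatch; here they are a plain list), treats that as the busy share of the current vCPUs, and picks the smallest C5 size that keeps projected p95 CPU at or below a target. The 80% target is an assumed value. For example, a c5.2xlarge (8 vCPUs) at a p95 of 20% has 1.6 busy vCPUs, which fits a 2-vCPU c5.large at exactly 80%.

```python
# vCPU counts for the C5 sizes discussed above
C5_VCPUS = {"c5.large": 2, "c5.xlarge": 4, "c5.2xlarge": 8, "c5.4xlarge": 16}


def p95(samples):
    """Nearest-rank 95th percentile of a non-empty list of CPU % samples."""
    ordered = sorted(samples)
    rank = -(-95 * len(ordered) // 100)  # ceil(0.95 * n) using integer math
    return ordered[rank - 1]


def recommend_c5_size(current_vcpus, cpu_samples, target_percent=80):
    """Smallest C5 size whose projected p95 CPU stays at or below target_percent.

    Hypothetical heuristic: assumes CPU demand carries over linearly to a
    different vCPU count, which ignores memory, network, and burst behaviour.
    """
    # Demand in "vCPU-percent": 8 vCPUs at 20% -> 160, i.e. 1.6 busy vCPUs
    demand = current_vcpus * p95(cpu_samples)
    for size, vcpus in sorted(C5_VCPUS.items(), key=lambda kv: kv[1]):
        # Projected utilization on this size is demand / vcpus percent
        if demand <= target_percent * vcpus:
            return size
    return None  # nothing in this table is large enough


print(recommend_c5_size(8, [20] * 100))
```

A conservative target leaves headroom for traffic spikes. Auto Scaling then adds capacity when sustained load exceeds what a single smaller instance can handle.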

2. Database Optimization

The RDS optimization was particularly interesting:

```sql
-- Before optimization
SELECT * FROM large_table WHERE status = 'active';
-- Execution time: 2.3 seconds

-- After adding indexes and query optimization
CREATE INDEX idx_status ON large_table(status);
SELECT id, name, status FROM large_table WHERE status = 'active';
-- Execution time: 0.1 seconds
```
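One quick way to confirm that a new index is actually being used is to compare the query plan before and after creating it. The sketch below uses SQLite from Python's standard library only because it is self-contained. On an RDS engine, the equivalent check is `EXPLAIN` (MySQL) or `EXPLAIN ANALYZE` (PostgreSQL). The table contents are made up.

```python
import sqlite3


def plan(conn, sql, params=()):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE large_table (id INTEGER PRIMARY KEY, name TEXT, status TEXT)")
# Made-up data: roughly 10% of rows are 'active'
conn.executemany(
    "INSERT INTO large_table (name, status) VALUES (?, ?)",
    [(f"row{i}", "active" if i % 10 == 0 else "archived") for i in range(10_000)],
)

query = "SELECT id, name, status FROM large_table WHERE status = ?"
before = plan(conn, query, ("active",))  # no index yet, so a full table scan
conn.execute("CREATE INDEX idx_status ON large_table(status)")
after = plan(conn, query, ("active",))   # the plan should now reference idx_status
print(before)
print(after)
```

An index on a column like `status` pays off only when the filtered value is reasonably selective. If most rows were `'active'`, the planner could reasonably keep scanning the table.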

By optimizing our database queries and right-sizing our RDS instances, we:

- Reduced RDS instance size from db.r5.2xlarge to db.r5.large

- Implemented read replicas for read-heavy workloads

- Set up automated snapshot cleanup policies

Results: 35% reduction in RDS costs with improved query performance.
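For the snapshot cleanup, a minimal sketch might look like the following. It assumes a boto3 RDS client and a simple age-based rule. The 30-day threshold in the usage line is an assumption, and real retention should follow your backup and compliance requirements. Only manual snapshots are considered, because RDS already expires automated snapshots according to each instance's backup retention period.

```python
from datetime import datetime, timedelta, timezone


def stale_manual_snapshots(rds, max_age_days, now=None):
    """Return identifiers of manual RDS snapshots older than max_age_days.

    `rds` is assumed to be a boto3 RDS client.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    stale = []
    kwargs = {"SnapshotType": "manual"}
    while True:
        page = rds.describe_db_snapshots(**kwargs)
        for snap in page["DBSnapshots"]:
            created = snap.get("SnapshotCreateTime")  # skip entries without a creation time
            if created is not None and created < cutoff:
                stale.append(snap["DBSnapshotIdentifier"])
        # RDS paginates with a Marker
        marker = page.get("Marker")
        if not marker:
            break
        kwargs["Marker"] = marker
    return stale


def delete_snapshots(rds, identifiers, dry_run=True):
    """Delete the given snapshots; with dry_run=True only report what would be deleted."""
    for identifier in identifiers:
        if dry_run:
            print(f"would delete {identifier}")
        else:
            rds.delete_db_snapshot(DBSnapshotIdentifier=identifier)
```

Usage: `delete_snapshots(rds, stale_manual_snapshots(rds, max_age_days=30))` prints the candidates. Passing `dry_run=False` is a deliberate second step.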

3. Lambda Optimization

Here’s where things got really interesting. Our Lambda functions were running longer than necessary because of code like this:

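```python
# Before optimization
def lambda_handler(event, context):
    # boto3 is imported inside the handler
    import boto3

    # A new client (with new HTTPS connections) is created on every invocation
    s3 = boto3.client('s3')

    # Inefficient S3 operations: one blocking call per item, in sequence
    for item in large_list:
        s3.put_object(...)


# After optimization
import concurrent.futures

import boto3  # Moved outside the handler

s3 = boto3.client('s3')  # Created once per execution environment, reused by warm invocations


def upload_to_s3(item):
    s3.put_object(...)


def lambda_handler(event, context):
    # Upload items concurrently instead of one at a time (boto3 clients are thread-safe).
    # list() consumes the results so an exception in any upload is re-raised here.
    with concurrent.futures.ThreadPoolExecutor() as executor:
        list(executor.map(upload_to_s3, large_list))
```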

The optimization involved:

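- Moving the SDK import and client creation outside the handler, so warm invocations reuse them

- Reusing the client's connection pool instead of opening new connections on every invocation

- Running independent API calls concurrently (or batching them where the service supports it) instead of one at a time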

- Optimizing memory settings based on workload

Results: 45% reduction in Lambda costs and 60% improvement in execution time.
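The memory setting matters because Lambda allocates CPU in proportion to configured memory and bills compute in GB-seconds (memory multiplied by duration, metered per millisecond). A larger memory size can therefore be cheaper if it shortens the run enough. Here is a back-of-the-envelope comparison. The durations are made-up numbers rather than our measurements, and the per-request charge is left out because it does not depend on memory.

```python
def gb_seconds(memory_mb, duration_ms):
    """Compute units for one invocation: memory in GB times duration in seconds."""
    return (memory_mb / 1024) * (duration_ms / 1000)


def cheapest_setting(measurements):
    """Return the memory size (MB) with the lowest GB-seconds per invocation.

    `measurements` maps memory size in MB to an observed average duration in ms,
    e.g. collected by running the same workload at each setting.
    """
    return min(measurements, key=lambda mb: gb_seconds(mb, measurements[mb]))


# Hypothetical measurements: going from 512 MB to 1024 MB more than halves the duration
observed = {512: 1000, 1024: 400, 2048: 300}
for mb, ms in observed.items():
    print(f"{mb} MB x {ms} ms = {gb_seconds(mb, ms):.3f} GB-s")
print("cheapest:", cheapest_setting(observed))
```

With these numbers, 1024 MB costs 0.4 GB-s per call versus 0.5 GB-s at 512 MB. Beyond the point where extra CPU stops helping, more memory only adds cost.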

4. Storage Optimization

Storage optimization was a mix of automation and policy implementation:

- Created S3 lifecycle policies to move infrequently accessed data to cheaper storage tiers

- Implemented automated cleanup of unused EBS volumes

- Set up data retention policies based on compliance requirements
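Finding unused EBS volumes takes a single filtered API call. A volume in the `available` state is not attached to any instance but is still billed for its provisioned size. The minimal sketch below assumes a boto3 EC2 client and only lists candidates. Deleting them (for example with `delete_volume`, after snapshotting anything that might still be needed) is left as a separate, deliberate step.

```python
def unattached_volumes(ec2):
    """Return (volume_id, size_gib) for every EBS volume in the 'available' state.

    `ec2` is assumed to be a boto3 EC2 client.
    """
    found = []
    kwargs = {"Filters": [{"Name": "status", "Values": ["available"]}]}
    while True:
        page = ec2.describe_volumes(**kwargs)
        for volume in page["Volumes"]:
            found.append((volume["VolumeId"], volume["Size"]))
        # Keep requesting pages while EC2 returns a NextToken
        token = page.get("NextToken")
        if not token:
            break
        kwargs["NextToken"] = token
    return found
```

Running this on a schedule, and reporting the total GiB it finds, is enough to stop orphaned volumes from quietly accumulating again.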

Example S3 lifecycle rule:

```json
{
  "Rules": [
    {
      "ID": "MoveToGlacier",
      "Status": "Enabled",
      "Filter": {
        "Prefix": "logs/"
      },
      "Transitions": [
        {
          "Days": 30,
          "StorageClass": "STANDARD_IA"
        },
        {
          "Days": 90,
          "StorageClass": "GLACIER"
        }
      ]
    }
  ]
}
```

Results: 55% reduction in storage costs.
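A rule like the `MoveToGlacier` example can be attached with the CLI (`aws s3api put-bucket-lifecycle-configuration --bucket <bucket> --lifecycle-configuration file://lifecycle.json`) or from code. The minimal Python sketch below assumes a boto3 S3 client, and the bucket name in the test is hypothetical. It adds one sanity check based on an S3 rule: objects must be at least 30 days old before they can transition to Standard-IA or One Zone-IA. Keep in mind that the put call replaces the bucket's entire existing lifecycle configuration.

```python
# The MoveToGlacier rule from the lifecycle JSON, as a Python dict
LOGS_LIFECYCLE = {
    "Rules": [
        {
            "ID": "MoveToGlacier",
            "Status": "Enabled",
            "Filter": {"Prefix": "logs/"},
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
        }
    ]
}

# S3 only allows transitions to the IA classes for objects at least 30 days old
MIN_TRANSITION_DAYS = {"STANDARD_IA": 30, "ONEZONE_IA": 30}


def validate_lifecycle(config):
    """Raise ValueError if a transition targets an IA storage class too early."""
    for rule in config["Rules"]:
        for transition in rule.get("Transitions", []):
            minimum = MIN_TRANSITION_DAYS.get(transition["StorageClass"], 0)
            if transition["Days"] < minimum:
                raise ValueError(
                    f"rule {rule.get('ID', '?')}: {transition['StorageClass']} "
                    f"requires Days >= {minimum}, got {transition['Days']}"
                )


def apply_lifecycle(s3, bucket, config):
    """Check the configuration, then attach it to the bucket.

    `s3` is assumed to be a boto3 S3 client. This replaces the bucket's whole
    lifecycle configuration, so `config` must contain every rule to keep.
    """
    validate_lifecycle(config)
    s3.put_bucket_lifecycle_configuration(Bucket=bucket, LifecycleConfiguration=config)
```

Keeping the rules in version control and applying them through one function like this makes it harder for a bucket to drift back to having no lifecycle policy.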

The Automation Layer

To maintain these optimizations, I built a custom monitoring solution using AWS CDK:

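```typescript
import * as cdk from 'aws-cdk-lib';
import * as events from 'aws-cdk-lib/aws-events';
import * as targets from 'aws-cdk-lib/aws-events-targets';
import * as iam from 'aws-cdk-lib/aws-iam';
import * as lambda from 'aws-cdk-lib/aws-lambda';
import { Construct } from 'constructs';

export class CostOptimizationStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);

    // Cost monitoring Lambda (handler code lives in ./lambda/index.py)
    const monitoringFunction = new lambda.Function(this, 'CostMonitoring', {
      runtime: lambda.Runtime.PYTHON_3_9,
      handler: 'index.handler',
      code: lambda.Code.fromAsset('lambda'),
    });

    // Assumption: the handler reads cost data from Cost Explorer,
    // which does not support resource-level permissions
    monitoringFunction.addToRolePolicy(new iam.PolicyStatement({
      actions: ['ce:GetCostAndUsage'],
      resources: ['*'],
    }));

    // Daily cost check at 00:00 UTC
    new events.Rule(this, 'DailyCostCheck', {
      schedule: events.Schedule.cron({ minute: '0', hour: '0' }),
      targets: [new targets.LambdaFunction(monitoringFunction)],
    });
  }
}
```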
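The stack wires up the schedule, but the function's code isn't shown. A minimal sketch of what `lambda/index.py` could contain follows, under explicit assumptions. It sums yesterday's unblended cost via Cost Explorer and flags the day if it exceeds a threshold read from a hypothetical `DAILY_BUDGET_USD` environment variable. The function's role needs `ce:GetCostAndUsage`. Where an alert goes (SNS, Slack, email) is left out. Cost Explorer data can lag, so yesterday's figure may still be partial when the check runs.

```python
import os
from datetime import date, timedelta

_ce_client = None  # created on first use, then reused across warm invocations


def _cost_explorer():
    global _ce_client
    if _ce_client is None:
        import boto3  # imported lazily so the cost logic can be tested without boto3
        _ce_client = boto3.client("ce")
    return _ce_client


def daily_cost(ce, day):
    """Total unblended cost in USD for one day (Cost Explorer's End date is exclusive)."""
    response = ce.get_cost_and_usage(
        TimePeriod={"Start": day.isoformat(), "End": (day + timedelta(days=1)).isoformat()},
        Granularity="DAILY",
        Metrics=["UnblendedCost"],
    )
    return sum(float(r["Total"]["UnblendedCost"]["Amount"]) for r in response["ResultsByTime"])


def handler(event, context, ce=None, today=None):
    """Entry point ('index.handler'); `ce` and `today` exist only for testing."""
    ce = ce or _cost_explorer()
    yesterday = (today or date.today()) - timedelta(days=1)
    cost = daily_cost(ce, yesterday)
    budget = float(os.environ.get("DAILY_BUDGET_USD", "200"))  # hypothetical default
    over_budget = cost > budget
    if over_budget:
        print(f"Cost alert: ${cost:.2f} on {yesterday} exceeds ${budget:.2f}")
    return {"date": yesterday.isoformat(), "cost": round(cost, 2), "over_budget": over_budget}
```

Lambda calls `handler(event, context)` with just the two standard arguments, so the extra keyword parameters fall back to the real client and today's date.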

Key Learnings

- Monitoring is Critical: You can’t optimize what you can’t measure. Detailed monitoring helped identify optimization opportunities.

- Automate Everything: Manual optimizations don’t scale. Automation ensures consistent cost management.

- Right-sizing is an Iterative Process: Start conservative and adjust based on real usage patterns.

- Developer Education Matters: Teaching the team about cost-efficient AWS practices created a cost-conscious culture.

The Results

After implementing these optimizations:

- Monthly AWS bill reduced from $15,000 to $5,250

- Application performance improved by 40%

- Team productivity increased due to automated monitoring

- Established a sustainable cost optimization culture

What’s Next?

I am now exploring:

- Implementing AWS Savings Plans for further cost reduction

- Container optimization using AWS App Runner

- Serverless architecture patterns for specific workloads

- Advanced monitoring using AWS CloudWatch Insights

Remember, AWS cost optimization is not a one-time task but a continuous journey. The cloud is always evolving, and so should your optimization strategies.