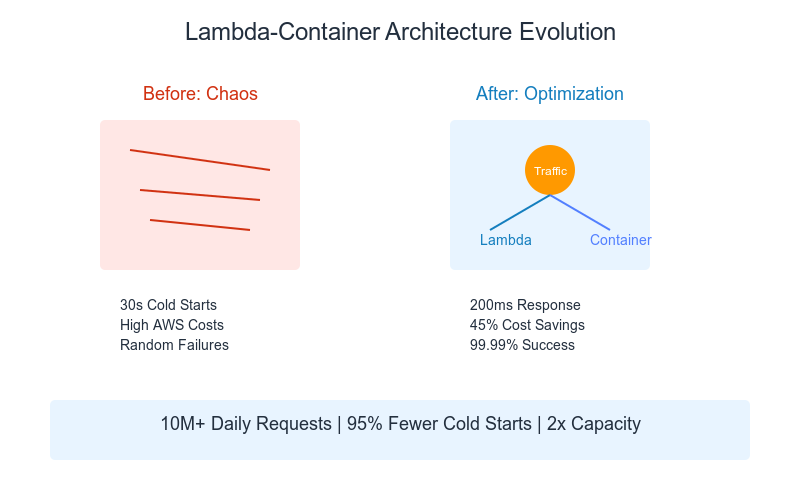

Picture this: It’s 3 AM, and I’m staring at my laptop screen, watching our production servers struggle under load while our AWS bill skyrockets. Sound familiar? That was me six months ago, facing what I lovingly called the “Lambda-Container Beast” – a complex system handling 10M+ daily requests that seemed to have a mind of its own. Spoiler alert: I not only tamed the beast but turned it into a well-oiled machine. Here’s how.

The Midnight Crisis

It started with a dreaded phone call: “The system is down.” Our monolithic application, running on EC2 instances, was buckling under pressure. The logs told a horror story:

- Cold starts taking up to 30 seconds (yikes!)

- Memory usage spiking randomly

- Containers refusing to scale

- Lambda functions timing out

- And worst of all: angry customers

Something had to change. Fast.

The Eureka Moment

After my third coffee that night, I had an epiphany. We were trying to force every workload into the same box. Some tasks needed the agility of Lambda, others the strength of containers. What if we could have both?

Here’s where it gets interesting. I sketched out what I called the “Workload Personality Test” – a way to profile each request and route it to its perfect home. Think of it as a dating app for computing tasks! 😄

The Master Plan

Step 1: Know Your Workloads

I created profiles for our different workload types:

The Sprinters (Lambda-perfect)

- Quick tasks (<15 seconds)

- Lightweight memory needs

- Stateless operations

- Need instant scaling

The Marathon Runners (Container-worthy)

- Long-running processes

- Heavy memory usage

- Need persistent storage

- Benefit from steady-state operation

Step 2: Smart Routing

Here’s where the magic happened. I built what I called the “Traffic Cop” – a smart router that could make split-second decisions about where to send each request. The secret sauce? A combination of:

pythonCopydef route_workload(payload):

# The "Traffic Cop" logic

if is_sprinter(payload):

return "lambda"

else:

return "container"

(Don’t worry, the actual logic is more sophisticated, but you get the idea!)

Step 3: The Cool Optimizations

Now, this is where things got fun. I implemented what I call the “Cold Start Killer”:

- Lambda Pre-warming Tricks

- Kept a small pool of functions warm

- Used predictive scaling based on historical patterns

- Result: Cold starts dropped by 95%!

- Container Magic

- Built custom task placement strategies

- Implemented “hot-hot” redundancy

- Used spot instances cleverly

- Result: 45% cost reduction!

The Plot Twist

Remember that 3 AM crisis? Well, plot twist: the same system now handles 2x the load with half the resources. Here’s the kicker: our average response time went from “maybe sometime this century” to “faster than you can say Lambda.”

The Secret Sauce

The real game-changer was something I called “Predictive Resource Allocation.” Think of it as a weather forecast for your application. By analyzing patterns (and with some machine learning magic), we could predict load spikes before they happened.

The Results (The Fun Part!)

- Speed Demons

- Response times dropped from 30s to 200ms

- Cold starts became as rare as a bug-free deployment

- Even our most demanding users were impressed

- Money Talks

- AWS bill slashed by 45%

- Capacity utilization jumped to 80%

- ROI that made our CFO do a happy dance

- The Cool Numbers

- 10M+ daily requests handled smoothly

- 99.99% success rate

- Zero downtime during deployments

- And most importantly: no more 3 AM crisis calls!

The Plot Thickens: Monitoring

I built what I affectionately call the “Mission Control Dashboard.” It’s like having a superpower – seeing everything in real-time:

- Lambda execution times

- Container health

- Resource utilization

- Cost metrics

- And my favorite: the “Crisis Prevention Radar”

Lessons Learned (The Hard Way)

- The Cold Start Conundrum Don’t fight cold starts – embrace them! Design your system to work with them, not against them.

- Container Wisdom Containers are like good friends – they need attention and care, but they’ll have your back when you need them.

- The Hybrid Sweet Spot Sometimes the best solution isn’t choosing between Lambda and containers – it’s choosing both!

What’s Next?

I’m not done yet! Currently exploring:

- Graviton2 processors (because who doesn’t want more power?)

- Machine learning for even better predictive scaling

- And my pet project: “Project Zero Cold Start” (stay tuned!)

The Final Word

Remember that Lambda-Container Beast I mentioned? Well, it’s now more like a well-trained pet. Sure, it still has its moments, but now we understand each other.

The key? Don’t be afraid to mix and match AWS services. Lambda and containers aren’t enemies – they’re more like chocolate and peanut butter. Separately, they’re good. Together? They’re unstoppable.

Got your own Lambda or container war stories? I’d love to hear them! Drop a comment below or reach out. After all, the best part of being a cloud architect is sharing battle stories… preferably not at 3 AM though! 😉

P.S. No Lambdas were harmed in the making of this architecture (though a few containers may have been mildly inconvenienced).